Social media isn’t safe for teens

TikTok’s new rules are not enough to protect minors

Internet safety and privacy issues such as cyberbullying, grooming and sharing personal information impact minors in unique ways, yet most social media sites refuse to implement sweeping, specific protections for this age group.

February 2, 2021

Some young TikTok users may have noticed recent changes to their account settings. In January, the app implemented a few new safety features for users who are under 18. For individuals between 13 and 15, accounts are now set to private by default, the “suggest your account to others” setting is turned off and commenting options are limited to either “friends only” or “no one.” The Duet and Stitch features, which allow people to incorporate other users’ videos into their own, are not available for this age group, and other users will not be able to download their videos. For 16- and 17-year-old users, the Duet and Stitch features on their videos will default to “friends,” and the download option on their videos will default to off. The company, whose US user base is largely made up of young people, was praised by multiple child safety organizations like the National PTA and Family Online Safety Institute. However, the moves are baby steps regarding an issue that needs radical, immediate change.

TikTok’s decision to put new protections in place comes after a deal the company made with the Federal Trade Commission to settle charges that it violated the Children’s Online Privacy Protection Act (COPPA). The company was allegedly collecting information from children under 13, including email addresses, videos and names, without parental consent while doing nothing about the high number of child predators messaging kids on the app. TikTok agreed to make changes to its privacy practices but has been criticized by children’s groups who claim it did not carry out those changes after the settlement. Considering this history of avoiding real action in favor of PR tactics, people should be wary of TikTok’s recent claims that it is committed to youth safety on its app.

To many teens, cries of the “dangers of social media” sound like alarmist hype from older generations who are stuck in their ways. However, as the younger generation who grew up with the internet begins to show the dangerous long-term impacts it can have, more people from all generations are starting to decry social media. Just as important as the long-term effects of phone addiction, short attention spans and damaged mental health from social media are the short-term concerns about privacy and safety.

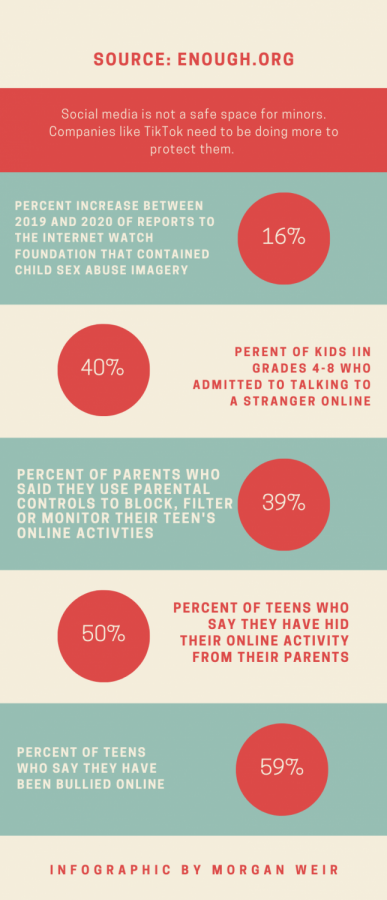

These short-term dangers of social media impact teens in unique ways. Teens who overshare on social media or post inappropriate or controversial content have to worry not just about social backlash but about their future college and career prospects. Seventy percent of employers look at candidates’ social media during the hiring process and 29% of colleges look at applicants’ social media during the admissions process. Doxing, a form of cyberbullying that involves publicly sharing private information, has become increasingly common among teens on social media and poses both safety and privacy risks. In fact, cyberbullying in general has become the most common form of harassment among teens, with 59% saying they have been a victim of cyberbullying despite social media sites’ claims that they are working to curb the issue. Because of this, minors need special protection of their privacy and safety.

TikTok is a multibillion-dollar corporation with a large cultural stake, and its choice to make even just a few changes for minors will likely lead to some other changes in social media policies and norms. However, the new safety features are not a definitive solution for the lack of safety and privacy for minors on social media, nor do they address the larger issue, which is that young people’s voices are not heard in the digital space. Young people are viewed as just more users to profit from rather than a unique cohort that needs special protections. Social media in general is a largely underregulated landscape where teens fade into the background, even though they make up a significant chunk of the demographics (and the profit).

Because teens are such a large part of the user base, social media corporations have a responsibility to protect them. Ideally, parents would be the ones championing their children’s safety, but studies show that only about 50% of parents with kids between 5 and 15 years old use parental controls. Teens in the digital age are also able to easily find ways around those controls, as shown by the common phenomenon of Finstas, or fake Instagram accounts, that teens make to hide their online activity from their parents. Because their brains are still developing, teens themselves tend to make decisions based on emotions and impulse, which means it’s hard for them to make safe choices on social media 100% of the time. For these reasons, the burden of protection must fall on social media sites. Companies like TikTok, Snapchat and Instagram make much of their money off of teens, and they have the power and resources to make sure those teens are safe when using their platforms.

In order to show that they truly value their teenage users, TikTok and other social media sites need to implement more aggressive protections and hire people to see those protections through. Requiring that users be over the age of 13 is nothing more than an attempt to shift liability unless moderators are actively seeking out accounts that they suspect don’t meet age guidelines and requiring proof of age, like a driver’s license or an online ID.

Ultimately, if social media sites really want to protect minors, they need to protect everyone’s data. With TikTok’s new rules in place, and the app doing little, if anything, to verify ages, there will undoubtedly be a surge of users lying about their age. COPPA mandates that sites obtain parental permission before collecting personal information from users under 13, and some states like California have laws that extend those protections to anyone under 16. By making it easy and enticing for minors to lie about their age in order to avoid the new privacy features, TikTok has essentially created a way to collect (and sell) minors’ data without having to ask permission or risk being fined. Until the app is protecting everyone’s data and not collecting personal information, minors will not be fully protected.

TikTok’s new safety features are good first steps, but they don’t do enough to confront the rampant dangers that teens face online. Social media won’t be a safe place for teens until the corporations that profit off of them make the effort to protect them.